Source: opencv

In camera calibration I showed how to use the camera_calibration ROS package to calibrate a camera (a pinhole camera, in my case). Now I want to understand the mathematics behind it, which looks a lot like the mathematics applied in camera backward projection.

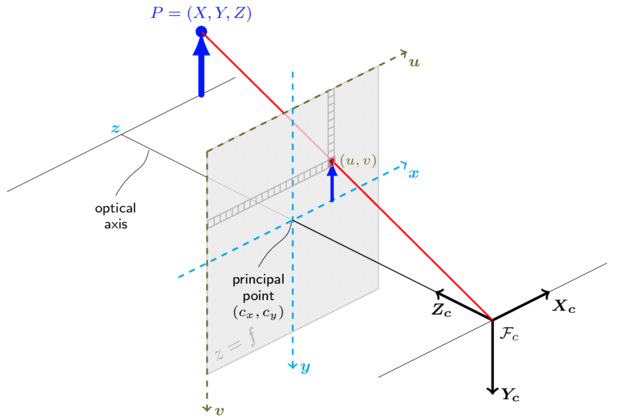

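In the pinhole camera model, a scene view is formed by projecting 3D points onto the image plane using a perspective transformation:

$$s \, m' = A \, [R|t] \, M'$$

or

$$s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_1 \\ r_{21} & r_{22} & r_{23} & t_2 \\ r_{31} & r_{32} & r_{33} & t_3 \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}$$

where:

- $(X, Y, Z)$ are the coordinates of a 3D point in the world coordinate space

- $(u, v)$ are the coordinates of the projection point in pixels

- $A$ is a camera matrix, or a matrix of intrinsic parameters

- $(c_x, c_y)$ is a principal point that is usually at the image center

- $f_x, f_y$ are the focal lengths expressed in pixel units.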
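The projection above can be sketched in a few lines of NumPy. This is only an illustration, not the code used by camera_calibration: the function name `project_points` and all numeric values (focal lengths, principal point, test points) are made up for the example, and the camera is assumed to sit at the world origin with no rotation.

```python
import numpy as np

def project_points(points_world, A, R, t):
    """Project Nx3 world points to Nx2 pixel coordinates via s*m' = A [R|t] M'."""
    points_world = np.asarray(points_world, dtype=float)
    # Homogeneous world coordinates M' = (X, Y, Z, 1)^T, one column per point.
    M = np.hstack([points_world, np.ones((len(points_world), 1))]).T
    # Extrinsic matrix [R|t], shape 3x4.
    Rt = np.hstack([R, np.reshape(t, (3, 1))])
    # s * (u, v, 1)^T for every point, shape 3xN.
    sm = A @ Rt @ M
    # Divide by the scale factor s (third row) to obtain pixel coordinates.
    return (sm[:2] / sm[2]).T

# Illustrative intrinsics only (not taken from a real calibration).
fx, fy, cx, cy = 800.0, 800.0, 320.0, 240.0
A = np.array([[fx, 0.0, cx],
              [0.0, fy, cy],
              [0.0, 0.0, 1.0]])
R = np.eye(3)      # assumed: no rotation
t = np.zeros(3)    # assumed: camera at the world origin

pixels = project_points([[0.0, 0.0, 2.0], [0.5, -0.25, 2.0]], A, R, t)
print(pixels)
```

A point on the optical axis lands on the principal point, which is a quick sanity check for any camera matrix.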

Tip

If an image from the camera is scaled by a factor, all of these parameters (the focal lengths and the principal point) must be scaled by the same factor! The intrinsic parameters do not depend on the scene viewed, so once estimated they can be re-used as long as the focal length stays fixed.
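The scaling rule from the tip can be sketched as follows. The helper `scale_camera_matrix` is a hypothetical name, and the matrix values are illustrative; the sketch assumes the simple convention where the principal point is multiplied by the same factor as the focal lengths.

```python
import numpy as np

def scale_camera_matrix(A, factor):
    """Return the camera matrix for an image resized by `factor`."""
    A_scaled = np.array(A, dtype=float)  # np.array copies, so A is left untouched
    # The first two rows hold fx, cx and fy, cy; the last row (0, 0, 1) is unchanged.
    A_scaled[:2, :] *= factor
    return A_scaled

# Illustrative intrinsics only (not taken from a real calibration).
A = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])

A_half = scale_camera_matrix(A, 0.5)  # image downscaled to half size
print(A_half)
```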

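Similar to camera backward projection, the joint rotation-translation matrix $[R|t]$ (the extrinsic parameters) describes the camera motion around a static scene (a fair assumption here, as litter usually sits still on the ocean floor). It transforms the coordinates of a point $(X, Y, Z)$ into a coordinate system fixed with respect to the camera. When $z \neq 0$, the transformation above is equivalent to:

$$\begin{bmatrix} x \\ y \\ z \end{bmatrix} = R \begin{bmatrix} X \\ Y \\ Z \end{bmatrix} + t$$

$$x' = x / z, \qquad y' = y / z$$

$$u = f_x \, x' + c_x, \qquad v = f_y \, y' + c_y$$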

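As real lenses usually introduce some distortion, mostly radial plus slight tangential distortion, the above model is extended as:

$$\begin{bmatrix} x \\ y \\ z \end{bmatrix} = R \begin{bmatrix} X \\ Y \\ Z \end{bmatrix} + t$$

$$x' = x / z, \qquad y' = y / z$$

$$x'' = x' (1 + k_1 r^2 + k_2 r^4) + 2 p_1 x' y' + p_2 (r^2 + 2 x'^2)$$

$$y'' = y' (1 + k_1 r^2 + k_2 r^4) + p_1 (r^2 + 2 y'^2) + 2 p_2 x' y'$$

$$u = f_x \, x'' + c_x, \qquad v = f_y \, y'' + c_y$$

where,

$$r^2 = x'^2 + y'^2$$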

The distortion vector contains $(k_1, k_2, p_1, p_2)$, where $k_1$ and $k_2$ are radial distortion coefficients and $p_1$ and $p_2$ are tangential distortion coefficients.
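A minimal sketch of the distorted projection, assuming a four-element distortion vector of two radial and two tangential coefficients, here named `k1, k2, p1, p2`. The function name and all numbers are invented for illustration and do not come from a real calibration.

```python
import numpy as np

def project_with_distortion(point_world, A, R, t, dist):
    """Project one 3D point to pixels using radial and tangential distortion."""
    k1, k2, p1, p2 = dist
    # World coordinates -> camera coordinates.
    x, y, z = R @ np.asarray(point_world, dtype=float) + t
    # Normalized image coordinates (requires z != 0).
    xp, yp = x / z, y / z
    r2 = xp**2 + yp**2
    radial = 1.0 + k1 * r2 + k2 * r2**2
    # Radial term plus tangential terms.
    xpp = xp * radial + 2.0 * p1 * xp * yp + p2 * (r2 + 2.0 * xp**2)
    ypp = yp * radial + p1 * (r2 + 2.0 * yp**2) + 2.0 * p2 * xp * yp
    # Apply the focal lengths and the principal point from the camera matrix.
    u = A[0, 0] * xpp + A[0, 2]
    v = A[1, 1] * ypp + A[1, 2]
    return np.array([u, v])

# Illustrative values only.
A = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
point = [0.5, -0.25, 2.0]

ideal = project_with_distortion(point, A, R, t, (0.0, 0.0, 0.0, 0.0))
barrel = project_with_distortion(point, A, R, t, (-0.2, 0.0, 0.0, 0.0))
print(ideal, barrel)
```

With all coefficients set to zero the function reduces to the plain pinhole projection, and a negative `k1` pulls the projected point toward the principal point.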

Distortion coefficients are also intrinsic parameters, since they do not depend on the scene viewed.

If a camera has been calibrated on images of one resolution, the same distortion coefficients can be used for images of a different resolution from the same camera, while the focal lengths and the principal point need to be scaled appropriately.

I did something similar in my visual-odom implementation, but without explicitly specifying the camera intrinsic parameters: there I deduced the matrix from affine transformations instead.