Sources: this incredibly annoying guy on youtube and explanation

What is LSTM?

It is a sequential NN (a type of RNN) that allows information to persist over long periods of time. It is a good solution to the problem of a plain RNN, whose gradients would either explode or vanish in the Gradient Descent phase. What I mean by that is that a weight raised to a large power (one multiplication per unrolled time step), be it 0.5 or 2, would either get super close to 0 or blow up toward infinity.

An LSTM excels in capturing long-term dependencies (like remembering chapter 1 of a book) and is ideal for sequence prediction tasks.
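To make the vanishing/exploding point concrete for myself, here is a tiny sketch. It only multiplies a starting value by the same weight once per time step. The helper name `repeated_weight` and the choice of 50 steps are my own assumptions, not something from the video.

```python
def repeated_weight(weight: float, steps: int) -> float:
    """Multiply 1.0 by the same weight once per unrolled time step."""
    value = 1.0
    for _ in range(steps):
        value *= weight
    return value


steps = 50  # arbitrary number of time steps, chosen only for illustration
shrinking = repeated_weight(0.5, steps)  # heads toward 0 (vanishing)
growing = repeated_weight(2.0, steps)    # keeps doubling (exploding)

print(f"0.5 ** {steps} = {shrinking:.3e}")
print(f"2.0 ** {steps} = {growing:.3e}")
```

Any weight below 1 shrinks the signal at every step and any weight above 1 amplifies it, which is why a long sequence makes a plain RNN hard to train.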

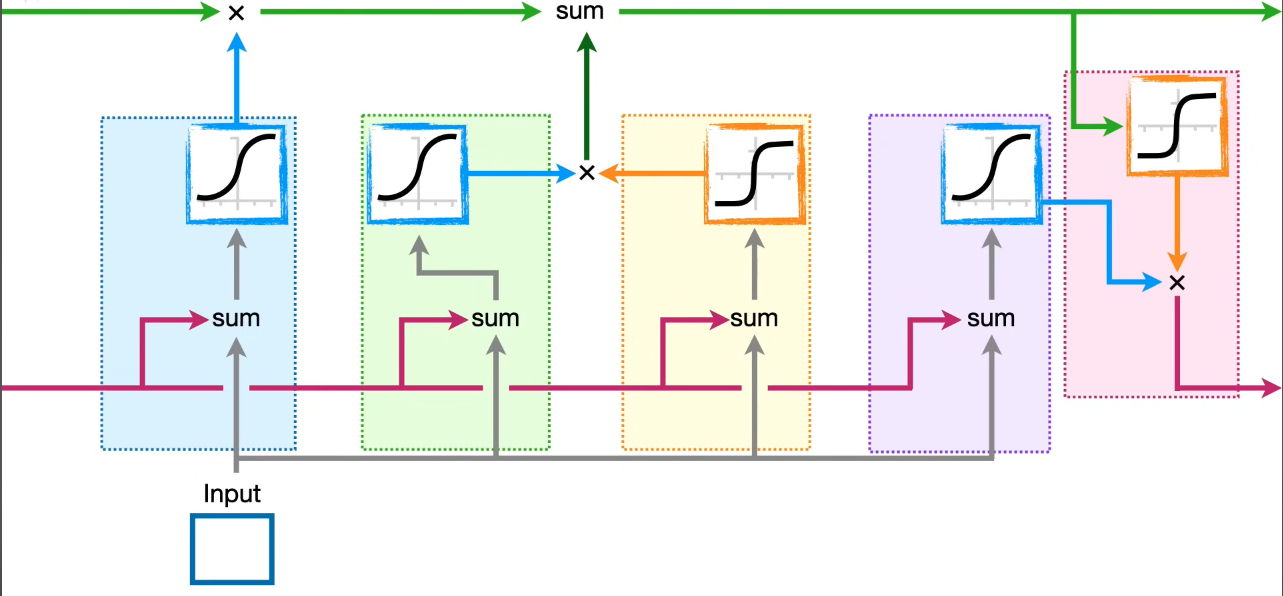

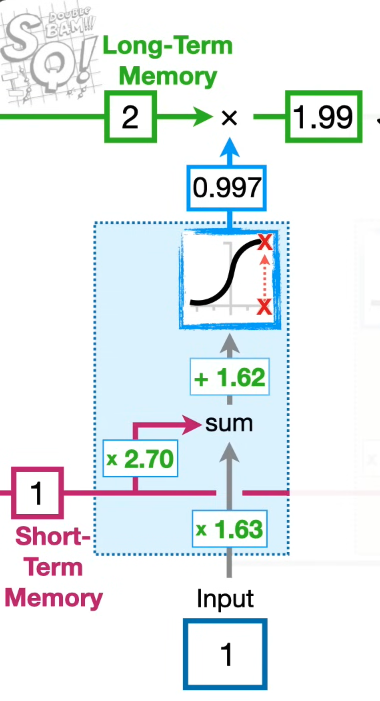

This algorithm uses 2 paths in order to predict the future state of some input (one for the long-term memory and one for the short-term).

Although the guy on youtube is terribly annoying, he has some pretty good explanations and figures. So I will explain based on those figures.

- The functions in BLUE are called **Sigmoid Activation Functions**, which transform any input into an output between 0 and 1.
- The functions in ORANGE are called **Tanh Activation Functions**, which transform any input into an output between -1 and 1.
- The GREEN line is called the **CELL STATE** and represents the Long-Term Memory. It is modified by a multiplication and an addition, with no weights applied to it directly. That allows the memories to not explode or vanish.
- The PURPLE line represents the **Short-Term Memories**, which have weights attached to them.
- The values added right before the functions are called **Bias**.
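A quick way to check the output ranges of the two activation functions is to evaluate them on a few inputs. This is a minimal sketch using only the standard library. The sample inputs are arbitrary, and `sigmoid` is written by hand because Python's `math` module has no built-in version.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


# Arbitrary sample inputs, from strongly negative to strongly positive.
for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"x={x:6.1f}  sigmoid={sigmoid(x):.4f}  tanh={math.tanh(x):.4f}")
```

The sigmoid output works as a percentage (how much to keep), while the tanh output works as a signed value (what to add or subtract).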

Again, this guy has some really, really good visuals to explain all this.

The first stage decides what percentage of the Long-Term Memory is remembered. It is usually called the Forget Gate.
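Here is how I picture the Forget Gate as code for a single scalar unit. It is a sketch, not a trained model: the function name `forget_gate`, its parameter names, and every number below are made up for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def forget_gate(long_memory: float, short_memory: float, x: float,
                w_short: float, w_input: float, bias: float) -> float:
    """Scale the Long-Term Memory by a percentage between 0 and 1."""
    percent_to_remember = sigmoid(short_memory * w_short + x * w_input + bias)
    return long_memory * percent_to_remember


# Hypothetical values; a real network would learn the weights and bias.
old_long_memory = 2.0
new_long_memory = forget_gate(old_long_memory, short_memory=1.0, x=1.0,
                              w_short=0.5, w_input=0.8, bias=0.1)
print(f"long-term memory: {old_long_memory} -> {new_long_memory:.4f}")
```

The weights only touch the Short-Term Memory and the input. The Long-Term Memory itself is just multiplied by the resulting percentage.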

The next 2 blocks create a Potential Memory and denote how much of it contributes to the Long-Term Memory. This stage is usually called the Input Gate.
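The Input Gate can be sketched the same way for one scalar unit. The tanh block proposes the Potential Memory and the sigmoid block decides what percentage of it to add. The name `input_gate`, the `(w_short, w_input, bias)` tuple layout, and the numbers are my own assumptions.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def input_gate(long_memory: float, short_memory: float, x: float,
               candidate_params: tuple, percent_params: tuple) -> float:
    """Add a percentage of the Potential Memory to the Long-Term Memory."""
    cw_short, cw_input, c_bias = candidate_params
    pw_short, pw_input, p_bias = percent_params
    # Potential Memory: a value between -1 and 1.
    potential_memory = math.tanh(short_memory * cw_short + x * cw_input + c_bias)
    # Percentage of the Potential Memory to keep: a value between 0 and 1.
    percent_to_add = sigmoid(short_memory * pw_short + x * pw_input + p_bias)
    return long_memory + percent_to_add * potential_memory


# Hypothetical (w_short, w_input, bias) triples, not learned values.
long_before = 1.5
long_after = input_gate(long_before, short_memory=1.0, x=1.0,
                        candidate_params=(0.9, 1.2, -0.2),
                        percent_params=(0.6, 0.4, 0.0))
print(f"long-term memory: {long_before} -> {long_after:.4f}")
```

Since the added term is a percentage times a value between -1 and 1, a single step can never change the Long-Term Memory by more than 1 in either direction.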

The final 2 blocks represent the output of the LSTM and this stage is called the Output Gate.
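Putting the three stages together, one LSTM unit can be sketched as a single function that is applied once per element of the sequence. This is a scalar toy version under my own assumptions: the `PARAMS` table, the helper `pre_activation`, and all the numbers are hypothetical, and a real model would learn them with Gradient Descent.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


# Made-up (w_short, w_input, bias) triples, one per sigmoid/tanh block.
PARAMS = {
    "forget": (0.5, 0.8, 0.1),
    "input_pct": (0.6, 0.4, 0.0),
    "candidate": (0.9, 1.2, -0.2),
    "output": (0.7, 0.3, 0.2),
}


def pre_activation(name: str, short_memory: float, x: float) -> float:
    """Weighted sum of Short-Term Memory and input, plus the bias."""
    w_short, w_input, bias = PARAMS[name]
    return short_memory * w_short + x * w_input + bias


def lstm_step(long_memory: float, short_memory: float, x: float):
    """Run one unit: Forget Gate, Input Gate, then Output Gate."""
    # Forget Gate: keep only a percentage of the Long-Term Memory.
    long_memory *= sigmoid(pre_activation("forget", short_memory, x))
    # Input Gate: add a percentage of the Potential Memory.
    long_memory += (sigmoid(pre_activation("input_pct", short_memory, x))
                    * math.tanh(pre_activation("candidate", short_memory, x)))
    # Output Gate: the new Short-Term Memory is a percentage of tanh(long).
    # The right-hand side still reads the previous Short-Term Memory.
    short_memory = (sigmoid(pre_activation("output", short_memory, x))
                    * math.tanh(long_memory))
    return long_memory, short_memory


long_memory, short_memory = 0.0, 0.0  # both memories start empty
sequence = [1.0, 0.5, 0.25, 1.0]      # arbitrary example inputs
for x in sequence:
    long_memory, short_memory = lstm_step(long_memory, short_memory, x)
    print(f"x={x:.2f}  long={long_memory:.4f}  short={short_memory:.4f}")
```

After the last element, the Short-Term Memory is the output of the unit. The Long-Term Memory was only ever scaled and added to, never multiplied by a weight.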